Note: Woven Planet became Woven by Toyota on April 1, 2023.

By Luca del Pero and Ro Gupta

The intersection of maps and autonomy is an important, though oft overlooked, subject. To the lay observer, maps are simply navigational tools; and even to the more savvy, maps are often seen as merely one component, albeit an important one, of a vehicle’s localization, perception and planning stack. But maps can do much more. At Woven Planet we have been using maps to build a new, more effective, more scalable approach to autonomy, and we want to share some thoughts on this approach, which we call the behavioral model of driving (“BMOD”), and on the important role maps play in it.

Modeling Driving Behaviors

How Woven Planet is charting a new approach to autonomy

First, a few words about the BMOD itself and how it differs from other approaches to automated and autonomous driving. In broadest terms, the key differentiator is the level of engineering involvement in defining the behavior of the self-driving system.

Think of engineering involvement as a spectrum. At one end are rules-based approaches, where engineers hand-define a set of rules that strictly prescribes the vehicle’s driving behavior for every driving event. Machine learning plays a supporting role, limited to certain functions like vehicle deceleration, but the bulk of driving decisions are governed by these pre-established rules. The key benefit of this approach is interpretability: because the rules are discrete code, rules-based systems are far easier to disentangle and decipher, and they offer significant advantages in modularity and troubleshooting. At the same time, engineers developing rules-based systems must anticipate the full range of driving scenarios a vehicle will have to negotiate and design rules by hand to handle each one. As such, rules-based systems are inherently brittle: as scenarios become more complex and/or more varied, the rules become increasingly difficult to define.

At the other end of the spectrum is end-to-end machine learning. Here, deep neural networks learn to make driving decisions from real-world driving demonstrations, significantly reducing the reliance on hand-designed rules. These approaches typically use a single model that converts sensor inputs directly into control outputs. As a result, they require far less hard coding, and they can continuously improve when fed more training data, without needing to re-design the system. Yet this approach, too, has its limitations: unlike rules-based methods, ML models are inherently opaque, and it is difficult to determine from a model’s intermediate outputs how a given decision was reached. As a result, individual aspects of a model can be difficult to isolate, evaluate and debug. Moreover, to be viable, these models require large amounts of highly diverse training data covering the full range of potential driving domains. This is especially true in our case: as a subsidiary of the world’s largest automaker, our scope is worldwide, across all road classes.

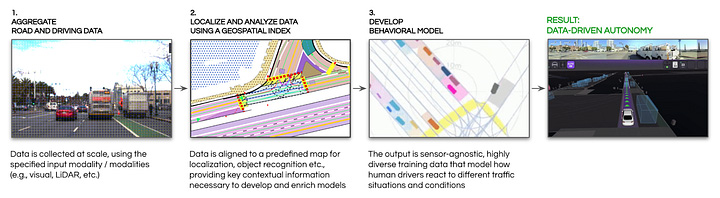

So we’re charting a blended approach, the behavioral model of driving, which strikes a balance between hard coding and machine learning. This model places an intermediate behavioral representation between sensor input and control output: raw sensory data is translated into a 3D representation that encodes all relevant information, including where the vehicle is and how it is moving, as well as the surrounding context (where map information plays a critical role). This preserves interpretability, since humans can reason about driving behaviors from this complete representation, and it provides a holistic picture from which ML models can learn to make safe driving decisions. The key is that the same representation allows both humans and ML models to reason about driving behaviors.
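To make the idea of an intermediate representation more concrete, here is a minimal Python sketch of what such a scene record could look like. It is not Woven Planet’s actual format: the class names (`EgoState`, `Agent`, `MapContext`, `BehaviorScene`), their fields and the `to_features` method are all hypothetical. The point it illustrates is that one structure can stay readable for an engineer inspecting a driving event while also flattening into a fixed-length vector that an ML model could learn from.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical scene schema for illustration only; not an actual Woven Planet format.


@dataclass
class EgoState:
    """Where the ego vehicle is and how it is moving."""
    x_m: float
    y_m: float
    heading_rad: float
    speed_mps: float


@dataclass
class Agent:
    """Another road user reported by perception."""
    agent_id: str
    x_m: float
    y_m: float
    speed_mps: float


@dataclass
class MapContext:
    """Context looked up from the map at the ego vehicle's position."""
    lane_id: str
    speed_limit_mps: float
    has_stop_line_ahead: bool


@dataclass
class BehaviorScene:
    """One snapshot of the representation: ego state, other agents and map context."""
    ego: EgoState
    agents: List[Agent]
    map_context: MapContext

    def to_features(self, max_agents: int = 2) -> List[float]:
        """Flatten the scene into a fixed-length vector an ML model could consume."""
        feats = [
            self.ego.x_m,
            self.ego.y_m,
            self.ego.heading_rad,
            self.ego.speed_mps,
            self.map_context.speed_limit_mps,
            1.0 if self.map_context.has_stop_line_ahead else 0.0,
        ]
        # Use the first `max_agents` agents; pad with zeros so every scene has the same length.
        for i in range(max_agents):
            if i < len(self.agents):
                a = self.agents[i]
                # Offset from the ego vehicle (not rotated into the ego heading frame).
                feats += [a.x_m - self.ego.x_m, a.y_m - self.ego.y_m, a.speed_mps]
            else:
                feats += [0.0, 0.0, 0.0]
        return feats
```

Keeping map context (lane, speed limit, stop line) as named fields next to the ego and agent state is what lets a person ask why a behavior happened; the flattening step is deliberately naive (fixed agent slots, unrotated offsets), and a real system would likely use a much richer encoding.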

This representation is powered by a crucial ingredient: maps.

Mapping Matters

Why maps play a central role in the behavioral model of driving

Maps have long played a role in automated and autonomous driving. They have traditionally been called the “fourth sensor,” alongside cameras, radar and lidar, because they help a vehicle with localization (where am I?), perception (what am I seeing?) and planning (how do I get to my destination safely?).

Similarly, maps play a critical role in the BMOD. To reason about — and learn from — driving behaviors, one needs to know: Where did this behavior occur? To what degree was it a response to — or at least, in compliance with — the rules of the road? And so on. A map pre-computed offline is how we answer these questions reliably.

Sensory data joins with map data to create the behaviors that autonomy algorithms train on.
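The questions above (where did a behavior occur, and did it comply with the rules of the road?) can be illustrated with a deliberately naive map-matching sketch in Python. It assumes a hypothetical offline map stored as lane centerline polylines in a local metric frame, each tagged with a speed limit; the names `Lane`, `match_lane` and `annotate` are illustrative only. A production system would use proper map projections, vehicle heading and a spatial index rather than a brute-force nearest-lane search.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Tuple

Point = Tuple[float, float]  # (x, y) in meters, in a hypothetical local metric frame


@dataclass
class Lane:
    """Hypothetical offline-map lane: a centerline polyline (>= 2 points) plus a speed limit."""
    lane_id: str
    centerline: List[Point]
    speed_limit_mps: float


def point_segment_distance(p: Point, a: Point, b: Point) -> float:
    """Euclidean distance from point p to the segment ab."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    seg_len_sq = dx * dx + dy * dy
    if seg_len_sq == 0.0:
        return math.hypot(px - ax, py - ay)
    # Project p onto the line through a and b, then clamp to the segment.
    t = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / seg_len_sq))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def match_lane(p: Point, lanes: List[Lane]) -> Lane:
    """Return the lane whose centerline is closest to p (brute-force map matching)."""
    def distance_to(lane: Lane) -> float:
        pts = lane.centerline
        return min(point_segment_distance(p, pts[i], pts[i + 1]) for i in range(len(pts) - 1))
    return min(lanes, key=distance_to)


def annotate(p: Point, speed_mps: float, lanes: List[Lane]) -> Dict[str, object]:
    """Attach map context to one logged observation: where it happened and speed-limit compliance."""
    lane = match_lane(p, lanes)
    return {
        "lane_id": lane.lane_id,
        "speed_limit_mps": lane.speed_limit_mps,
        "within_limit": speed_mps <= lane.speed_limit_mps,
    }
```

For example, with two parallel lanes 3.5 m apart (one along y = 0 with a 13.9 m/s limit, one along y = 3.5 with an 8.3 m/s limit), an observation at (50, 3.0) is matched to the second lane, and a logged speed of 10 m/s is flagged as exceeding its limit.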

An alternative is to extract map information in real time. This approach tends to be less robust, so we see it as complementary: online information can augment the content of the offline map.
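One simple way to picture online information augmenting, rather than replacing, the offline map is a merge rule in which the pre-computed map is the default and real-time detections only fill gaps or override stored values when they are confident. The function `augment_lane_attributes` and its two thresholds are hypothetical choices for illustration, not a description of how Woven Planet fuses the two sources.

```python
from typing import Dict, Tuple


def augment_lane_attributes(
    offline: Dict[str, object],
    online: Dict[str, Tuple[object, float]],
    min_fill_confidence: float = 0.5,
    min_override_confidence: float = 0.9,
) -> Dict[str, object]:
    """Hypothetical merge of real-time detections into offline map attributes for one lane.

    offline: attribute name -> value from the pre-computed map.
    online:  attribute name -> (detected value, detector confidence in [0, 1]).
    """
    merged = dict(offline)  # the offline map is the default
    for name, (value, confidence) in online.items():
        # Filling a missing attribute needs less evidence than overriding a stored one.
        threshold = min_override_confidence if name in merged else min_fill_confidence
        if confidence >= threshold:
            merged[name] = value
    return merged
```

Using a stricter threshold for overriding than for filling reflects the point above: because online extraction tends to be less robust, the offline map wins unless the real-time evidence is strong.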

Defining Standards

Using “medium definition” maps to bring added scalability to the behavioral model of driving (BMOD)

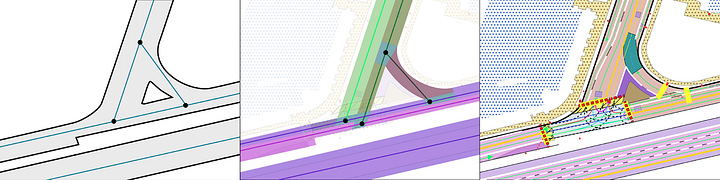

Our initial work on the BMOD was done using traditional HD maps, which are highly precise. They contain centimeter-accurate information, such as the positions of lane boundaries, but producing them typically requires dedicated survey vehicles and extensive manual curation, which makes them very expensive and hard to scale. As a consequence, industry trends point toward autonomy systems that are less reliant on this kind of centimeter-level map accuracy.

Enter the medium definition (MD) map. MD maps offer mid-range accuracy: they carry rich lane-level and rules-of-the-road metadata at sub-meter accuracy, which we believe is enough to build our BMOD.

Left to right: standard definition (SD) map; medium definition (MD) map; high definition (HD) map

The key benefit of this approach is clear: scalability. MD maps can be produced more quickly and more cheaply than HD maps, using less specialized equipment. Access to Toyota-scale data is our key advantage for keeping these large maps up to date.

Using MD maps allows us to build our BMOD at world-scale — helping power our vision of mobility for all.

Our work on the behavioral model of driving illustrates the power and potential of the integrated approach we’ve adopted here at Woven Planet. Connecting expertise from across our automated mapping and autonomy teams, in concert with our Arene OS teams, unlocks possibilities we might otherwise miss.

Come work with us if this type of multidisciplinary teamwork sounds up your alley.